How do evaluation questions relate to the underlying "logic" of the intervention?

2.16 Clearly, the complexity of the relationship(s) involved depends on the question being asked of the evaluation, and here the concept of the intervention "theory" or "logic model" is relevant. Logic models¹ describe the relationships between an intervention's inputs, activities, outputs, outcomes and impacts, as defined in Table 2.A.

Table 2.A: Definitions of the terms used in logic models²

| Term | Definition | Example |
| --- | --- | --- |
| Inputs | Public sector resources required to achieve the policy objectives. | Resources used to deliver the policy. |
| Activities | What is delivered on behalf of the public sector to the recipient. | Provision of seminars, training events, consultations etc. |
| Outputs | What the recipient does with the resources, advice/training received, or intervention relevant to them. | The number of completed training courses. |
| Intermediate outcomes | The intermediate outcomes of the policy produced by the recipient. | Jobs created, turnover, reduced costs or training opportunities provided. |
| Impacts | Wider economic and social outcomes. | The change in personal incomes and, ultimately, wellbeing. |

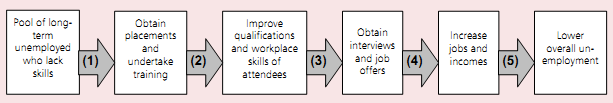

2.17 Box 2.C presents a simplified logic model for a hypothetical intervention to reduce unemployment by increasing training. The intervention is supposed to achieve its aims through a number of steps. As the number of steps increases, so do the complexity of the intervention, the number of factors which could be driving any observed changes in outcomes, and the period over which those changes might be observed. But between any two adjacent steps (e.g. link (1) in Box 2.C), the relationships are much simpler and there are fewer factors "at play". Hence, estimating a reliable counterfactual matters less when a question spans few steps, and more as the number of steps rises. For the same reason, the relative suitability of process and impact evaluation for answering questions about how the intervention performed is also likely to change with the number of steps.

Box 2.C: Formulating an evaluation: an example

As an example, suppose an evaluation is being planned for a job training scheme which is intended to provide placements for long-term unemployed people in companies where they can gain marketable skills and qualifications. The scheme aims to increase the number of interviews and job offers the participants receive, thereby increasing the number of participants in jobs and their incomes. Ultimately, there might be a reduction in overall unemployment. A simplified intervention logic would be:

A number of evaluation questions arise from link (1) in the chain. For example, how were people recruited onto the scheme? What proportion were retained for the duration of their placement? For how long had they been unemployed before starting? Link (2) might give rise to questions such as: what change was there in participants' skills and qualifications? Questions under link (3) might describe the type and number of interviews and job offers obtained, and the characteristics of the participants obtaining them. They might also involve assessing whether any improvement in skills contributed to participants gaining those interviews and job offers. Evaluation of link (4) might measure the increase in the number and type of jobs, and in participants' incomes. There might also be interest in knowing whether the scheme generated genuinely new jobs, or whether participants were simply taking jobs that would otherwise have been offered to others. Questions of interest under link (5) might include whether the scheme made any contribution to overall employment levels, either locally or nationally, taking account of economic conditions and trends. There might also be some attempt to measure the impact of the scheme on local economic performance and gross domestic product.
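To make the idea of questions spanning different numbers of steps more concrete, the chain in Box 2.C can be sketched as a small data structure. This is a minimal, hypothetical Python encoding: the `Link` class, the `links_spanned` helper and the short focus labels are illustrative assumptions that paraphrase the box, not part of any prescribed method. It shows only that a question about getting people onto placements touches one link, while a question about overall unemployment touches all five.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Link:
    """One link in the Box 2.C chain and some evaluation questions it raises."""
    number: int
    focus: str
    questions: list[str] = field(default_factory=list)


# Hypothetical encoding of Box 2.C; focus labels and questions paraphrase the box.
CHAIN = [
    Link(1, "recruitment and retention on placements",
         ["How were people recruited onto the scheme?",
          "What proportion were retained for the duration of their placement?"]),
    Link(2, "skills and qualifications",
         ["What change was there in participants' skills and qualifications?"]),
    Link(3, "interviews and job offers",
         ["Did improved skills contribute to interviews and job offers?"]),
    Link(4, "jobs and incomes",
         ["Did the scheme generate genuinely new jobs?"]),
    Link(5, "overall unemployment",
         ["Did the scheme contribute to overall employment levels?"]),
]


def links_spanned(first: int, last: int) -> list[Link]:
    """Return the links an evaluation question spans, from `first` to `last` inclusive."""
    if not 1 <= first <= last <= len(CHAIN):
        raise ValueError("link range out of bounds")
    return CHAIN[first - 1:last]


# Questions from paragraphs 2.18 to 2.20: link (4) alone, and links (1) to (5).
for first, last in [(4, 4), (1, 5)]:
    spanned = links_spanned(first, last)
    print(f"links {first}-{last}: {len(spanned)} link(s): "
          + "; ".join(link.focus for link in spanned))
```

Each extra link a question spans is another point at which factors other than the scheme could influence what is observed, which is the reasoning in paragraph 2.17.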

2.18 Using the example in Box 2.C, a process evaluation might be suitable for finding out which participants obtained which types of employment and what their characteristics were (link (4)). This information would also be extremely valuable, and perhaps even necessary, for answering the question "Did the training intervention increase participants' employment rates and incomes?" However, because so many factors could affect that result, only an impact evaluation is likely to generate a reliable answer to it.
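Why the counterfactual matters for this question can be illustrated with a minimal sketch. It assumes, purely for illustration, that employment rates are observed before and after the scheme for participants and for a comparison group of similar non-participants, and it uses a simple difference-in-differences estimate. That is one of several possible impact-evaluation designs, named here as an example rather than a method this guidance prescribes, and all figures are hypothetical.

```python
def naive_change(before: float, after: float) -> float:
    """Before/after change for participants alone: attributes all change to the scheme."""
    return after - before


def difference_in_differences(treated_before: float, treated_after: float,
                              comparison_before: float, comparison_after: float) -> float:
    """Participants' change minus the comparison group's change.

    The comparison group's change stands in for the counterfactual: what would
    have happened to participants anyway (e.g. because of economic conditions).
    """
    return (treated_after - treated_before) - (comparison_after - comparison_before)


# Hypothetical employment rates (proportion in work), not data from any real scheme.
participants = (0.20, 0.45)
comparison = (0.20, 0.35)  # employment also rose among similar non-participants

print(f"naive before/after change: {naive_change(*participants):.2f}")
print(f"difference-in-differences: {difference_in_differences(*participants, *comparison):.2f}")
```

In this made-up case, a before/after comparison alone would credit the scheme with the whole rise, whereas netting off the comparison group's rise leaves a much smaller estimated effect.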

2.19 However, if the question is "To what extent was the scheme successful in getting participants onto placements?", a process evaluation might be quite sufficient on its own. If participants were not accessing those placements previously, it might be reasonable to attribute any observed increase to the scheme. There might be some need to account for any "displacement" (e.g. participants switching from other placements they might previously have accessed), but if participants' training histories are reasonably well known and stable, the chance that some other factor caused a sudden change in behaviour might be considered low. For such a simple question, an impact evaluation might obtain a more robust answer, but it might add little to what a process evaluation could achieve.
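The process-evaluation reasoning above amounts to simple arithmetic on monitoring data, sketched below under the paragraph's own assumption that participants had no prior access to these placements (so the baseline is zero). The function name and all figures are hypothetical, and the displacement estimate is taken as given rather than derived.

```python
def net_placements(scheme_placements: int, displaced: int) -> int:
    """Placements attributable to the scheme after netting off displacement.

    `displaced` is a hypothetical estimate of participants who would otherwise
    have taken up some other placement they previously accessed.
    """
    if not 0 <= displaced <= scheme_placements:
        raise ValueError("displaced must lie between 0 and scheme_placements")
    return scheme_placements - displaced


# Hypothetical monitoring figures, for illustration only.
recruited = 400           # people recruited onto the scheme
scheme_placements = 300   # recruits who started a placement
displaced = 30            # estimated switchers from other placements

net = net_placements(scheme_placements, displaced)
print(f"gross placement rate: {scheme_placements / recruited:.1%}")
print(f"net placement rate:   {net / recruited:.1%}")
```

No comparison group is needed here because, with a zero baseline and stable histories, the main correction is the displacement adjustment.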

2.20 Finally, the question might be "What effect has the scheme had on overall unemployment?" (effectively links (1) to (5)). The great many factors which determine overall unemployment (macro-economic conditions, the nature of local industries, and so on) suggest that only an impact evaluation could feasibly answer it. However, with such a complex relationship, the chance of a single training scheme's effects showing up in measures of even quite local employment could be very small, unless the scheme represents a very significant change of policy and injection of resources operating over a considerable length of time. Even then, a strong, intensive impact evaluation might not be able to detect an effect amid all the other drivers of the outcome.
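One way to see why a small effect on unemployment is hard to detect is a conventional sample-size calculation. The sketch below uses the standard normal-approximation formula for comparing two independent proportions (two-sided test, 5% significance, 80% power); this formula, the function name and the unemployment rates are illustrative assumptions, not figures from this guidance. It also ignores clustering by area and the other drivers mentioned above, both of which would typically make detection harder still.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_treated: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group to distinguish two proportions.

    Normal-approximation formula for a two-sided test:
        n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p1 - p2)^2
    """
    if p_control == p_treated:
        raise ValueError("the two proportions must differ")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_treated) ** 2
    return math.ceil(n)


# Hypothetical unemployment rates: a large effect versus a very small one.
large_effect = sample_size_per_group(0.05, 0.03)   # 2 percentage-point fall
small_effect = sample_size_per_group(0.05, 0.049)  # 0.1 percentage-point fall
print(f"2-point fall:   about {large_effect:,} people per group")
print(f"0.1-point fall: about {small_effect:,} people per group")
```

Because the required sample grows with the inverse square of the effect size, shrinking the effect twentyfold raises the required sample roughly five-hundredfold in this example.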

_______________________________________________________________________

1 For further information, the Department for Transport's hints and tips guide to logic mapping is a practical tool that can aid understanding of logic models and help in developing them. Logic mapping: hints and tips, Tavistock Institute for the Department for Transport, October 2010. http://www.dft.gov.uk/

2 Evaluation Guidance for Policy makers and Analysts: How to evaluate policy interventions, Department for Business, Innovation and Skills, 2011.